I am using community v3.12.1.2 with 14 nodes. Each 64 GB RAM and 24 cores. My server config:

service {

user root

group root

paxos-single-replica-limit 1 # Number of nodes where the replica count is automatically reduced to 1.

pidfile /var/run/aerospike/asd.pid

#service-threads 24

#transaction-queues 24

transaction-threads-per-queue 4

proto-fd-max 30000

transaction-pending-limit 20

auto-pin cpu

}

logging {

# Log file must be an absolute path.

file /var/log/aerospike/aerospike.log {

context any info

}

}

network {

service {

address bond0

port 3000

access-address bond0

}

heartbeat {

# mode multicast

# address 224.0.0.116 # 239.1.99.222

# port 9918

mode mesh

port 3002

mesh-seed-address-port 10.56.101.184 3002 #a1

# followed by 13 more machines

#mesh-port 3002

# To use unicast-mesh heartbeats, remove the 3 lines above, and see

# aerospike_mesh.conf for alternative.

interval 150

timeout 10

}

fabric {

address bond0

port 3001

}

info {

port 3003

}

}

namespace test {

replication-factor 2

memory-size 1G

default-ttl 30d # 30 days, use 0 to never expire/evict.

storage-engine memory

}

namespace production {

replication-factor 2

memory-size 59G

default-ttl 0 # 30 days, use 0 to never expire/evict.

high-water-disk-pct 50 # How full may the disk become before the

# server begins eviction (expiring records

# early)

high-water-memory-pct 85 # How full may the memory become before the

# server begins eviction (expiring records

# early)

stop-writes-pct 90 # How full may the memory become before

# we disallow new writes

# storage-engine memory

storage-engine device {

#device /dev/sdb1

#data-in-memory false

file /opt/aerospike/data/production.data

filesize 1000G # 8 times of RAM

data-in-memory true

#write-block-size 128K # adjust block size to make it efficient for SSDs.

# largwst size of any object

}

}

Server settings:

$ asinfo -h localhost -v “get-config:context=service”

paxos-single-replica-limit=1;pidfile=/var/run/aerospike/asd.pid;proto-fd-max=30000;advertise-ipv6=false;batch-threads=24;batch-max-buffers-per-queue=255;batch-max-requests=5000;batch-max-unused-buffers=256;batch-priority=200;batch-index-threads=4;clock-skew-max-ms=1000;cluster-name=null;enable-benchmarks-fabric=false;enable-benchmarks-svc=false;enable-hist-info=false;hist-track-back=300;hist-track-slice=10;hist-track-thresholds=null;info-threads=16;ldt-benchmarks=false;log-local-time=false;migrate-max-num-incoming=4;migrate-threads=1;node-id-interface=null;nsup-delete-sleep=100;nsup-period=120;nsup-startup-evict=true;paxos-max-cluster-size=32;paxos-protocol=v3;paxos-recovery-policy=auto-reset-master;paxos-retransmit-period=5;proto-fd-idle-ms=60000;proto-slow-netio-sleep-ms=1;query-batch-size=100;query-buf-size=2097152;query-bufpool-size=256;query-in-transaction-thread=false;query-long-q-max-size=500;query-microbenchmark=false;query-pre-reserve-partitions=false;query-priority=10;query-priority-sleep-us=1;query-rec-count-bound=18446744073709551615;query-req-in-query-thread=false;query-req-max-inflight=100;query-short-q-max-size=500;query-threads=6;query-threshold=10;query-untracked-time-ms=1000;query-worker-threads=15;respond-client-on-master-completion=false;run-as-daemon=true;scan-max-active=100;scan-max-done=100;scan-max-udf-transactions=32;scan-threads=4;service-threads=24;sindex-builder-threads=4;sindex-gc-enable-histogram=false;ticker-interval=10;transaction-max-ms=1000;transaction-pending-limit=0;transaction-queues=4;transaction-repeatable-read=false;transaction-retry-ms=1002;transaction-threads-per-queue=4;work-directory=/opt/aerospike;write-duplicate-resolution-disable=false;fabric-dump-msgs=false;max-msgs-per-type=-1;memory-accounting=false;prole-extra-ttl=0;non-master-sets-delete=false

I changed transaction-pending-limit to 0 because i was getting lot of HOT KEY error.

Aerospike Log with enable-benchmarks-write=true:

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:170) NODE-ID bb9e047fb902500 CLUSTER-SIZE 14

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:253) system-memory: free-kbytes 4714768 free-pct 7 heap-kbytes (57819204,61686548,64284672) heap-efficiency-pct 89.9

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:267) in-progress: tsvc-q 0 info-q 0 nsup-delete-q 0 rw-hash 1 proxy-hash 0 tree-gc-q 0

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:289) fds: proto (1861,206933439,206931578) heartbeat (13,351,338) fabric (312,1395,1083)

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:298) heartbeat-received: self 0 foreign 154602263

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:328) fabric-bytes-per-second: bulk (0,0) ctrl (0,0) meta (0,0) rw (1971357,1929931)

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:352) early-fail: demarshal 0 tsvc-client 14 tsvc-batch-sub 0 tsvc-udf-sub 0

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:369) batch-index: batches (712022,0,84)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: batch-index (712022 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (00: 0000122597) (01: 0000557767) (02: 0000030778) (03: 0000000359)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (04: 0000000159) (05: 0000000088) (06: 0000000050) (07: 0000000044)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (08: 0000000047) (09: 0000000052) (10: 0000000081)

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:382) {test} objects: all 0 master 0 prole 0

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:443) {test} migrations: complete

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:462) {test} memory-usage: total-bytes 0 index-bytes 0 sindex-bytes 0 data-bytes 0 used-pct 0.00

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:382) {production} objects: all 259531214 master 131518951 prole 128012263

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:443) {production} migrations: complete

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:462) {production} memory-usage: total-bytes 49129298243 index-bytes 16609997696 sindex-bytes 10965855 data-bytes 32508334692 used-pct 77.55

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:492) {production} device-usage: used-bytes 73219840256 avail-pct 89

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:585) {production} client: tsvc (0,15290) proxy (505,0,9) read (2,0,0,0) write (111483291,12,2002) delete (0,0,0,0) udf (2424680314,7628358,55239) lang (189549,2422358178,2187475,2314437)

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:613) {production} batch-sub: tsvc (0,19540) proxy (114,0,0) read (180258099,0,0,67570463)

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:641) {production} scan: basic (4,32,0) aggr (0,0,0) udf-bg (0,0,0)

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:666) {production} query: basic (9222787,0) aggr (0,0) udf-bg (0,0)

Oct 12 2017 13:23:03 GMT: INFO (info): (ticker.c:735) {production} retransmits: migration 5727948 client-read 0 client-write (0,0) client-delete (0,0) client-udf (0,0) batch-sub 0 udf-sub (0,0) nsup 0

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-read (2 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (00: 0000000002)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-write (111483303 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (00: 0111163416) (01: 0000156529) (02: 0000055374) (03: 0000039086)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (04: 0000032302) (05: 0000016204) (06: 0000006511) (07: 0000003644)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (08: 0000003405) (09: 0000003169) (10: 0000002968) (11: 0000000653)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (12: 0000000041) (13: 0000000001)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-write-start (34190 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (00: 0000033229) (01: 0000000405) (02: 0000000360) (03: 0000000169)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (04: 0000000025) (05: 0000000002)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-write-restart (0 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-write-dup-res (0 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-write-master (34190 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (00: 0000034123) (01: 0000000004) (02: 0000000012) (03: 0000000015)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (04: 0000000014) (05: 0000000011) (06: 0000000004) (08: 0000000002)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (09: 0000000001) (11: 0000000004)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-write-repl-write (34172 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (00: 0000034080) (01: 0000000006) (02: 0000000005) (03: 0000000008)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (04: 0000000006) (05: 0000000014) (06: 0000000011) (07: 0000000007)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (08: 0000000004) (09: 0000000015) (10: 0000000016)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-write-response (34172 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (00: 0000034172)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-udf (2432308672 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (00: 2399887009) (01: 0006768612) (02: 0006740875) (03: 0010336102)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (04: 0006929781) (05: 0000970993) (06: 0000232649) (07: 0000141963)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (08: 0000104393) (09: 0000085639) (10: 0000088441) (11: 0000019321)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (12: 0000002828) (13: 0000000066)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-query (9222787 total) msec

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (00: 0005279450) (01: 0003932800) (02: 0000004531) (03: 0000001490)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (04: 0000001653) (05: 0000001141) (06: 0000000362) (07: 0000000259)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (08: 0000000224) (09: 0000000240) (10: 0000000303) (11: 0000000303)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:171) (12: 0000000025) (13: 0000000006)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:145) histogram dump: {production}-query-rec-count (9198773 total) count

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (01: 0000000142) (02: 0000000456) (03: 0000002995) (04: 0000015958)

Oct 12 2017 13:23:03 GMT: INFO (info): (hist.c:162) (05: 0000276484) (06: 0002762003) (07: 0004584236) (08: 0001556499)

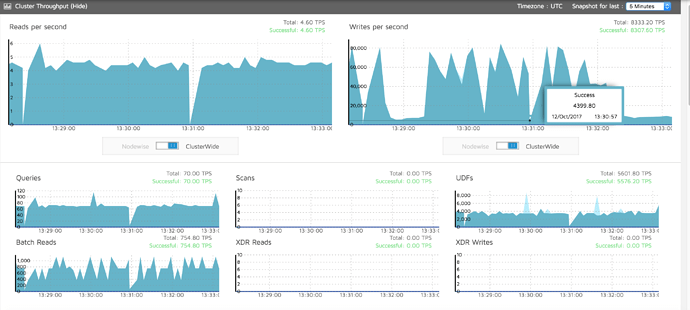

I am doing mostly UDF and writes:

The batch Reads Graph seems incorrect. We are sending ~160-180K/s batch read (not batch index) with each batch of size 6-8.

Aslatency stats:

Write

$ asloglatency -h {production}-write

{production}-write

Oct 12 2017 13:17:33

% > (ms)

slice-to (sec) 1 8 64 ops/sec

-------------- ------ ------ ------ ----------

13:17:43 10 3.34 0.35 0.00 575.7

13:17:53 10 4.13 0.49 0.04 525.5

13:18:03 10 5.63 0.97 0.35 692.6

13:18:13 10 6.24 0.25 0.05 5274.9

13:18:23 10 7.22 0.31 0.03 4861.1

13:18:33 10 6.02 0.23 0.01 4919.3

13:18:43 10 4.99 0.58 0.28 2721.2

13:18:53 10 2.58 0.30 0.04 4702.2

13:19:03 10 1.24 0.23 0.02 4445.3

13:19:13 10 2.11 0.39 0.15 4118.8

13:19:23 10 2.33 0.29 0.06 3332.7

13:19:33 10 2.82 0.26 0.01 4788.3

13:19:43 10 5.26 0.47 0.15 4391.4

13:19:53 10 9.24 0.30 0.02 3250.9

13:20:03 10 5.47 0.23 0.01 5391.2

13:20:13 10 1.65 0.22 0.02 5183.2

13:20:23 10 2.89 0.70 0.18 3563.4

13:20:33 10 2.27 0.71 0.06 4096.6

13:20:43 10 0.90 0.31 0.13 4155.1

13:20:53 10 1.95 0.30 0.12 4138.9

13:21:03 10 1.49 0.24 0.02 3078.7

13:21:13 10 0.80 0.23 0.07 5597.5

13:21:23 10 1.27 0.24 0.06 4628.2

13:21:33 10 1.69 0.23 0.05 3684.2

13:21:43 10 1.79 0.21 0.03 4623.0

13:21:53 10 2.17 0.17 0.01 5499.3

13:22:03 10 3.26 0.32 0.12 3607.9

13:22:13 10 0.75 0.20 0.02 4152.4

-------------- ------ ------ ------ ----------

avg 3.27 0.35 0.08 3928.0

max 9.24 0.97 0.35 5597.5

UDF:

$ asloglatency -N production -h udf

{production}-udf

Oct 12 2017 13:17:26

% > (ms)

slice-to (sec) 1 8 64 ops/sec

-------------- ------ ------ ------ ----------

13:17:36 10 3.46 0.04 0.00 248.3

13:17:46 10 4.92 0.00 0.00 256.1

13:17:56 10 2.82 0.00 0.00 254.9

13:18:06 10 2.03 0.00 0.00 286.1

13:18:16 10 2.69 0.08 0.00 238.1

13:18:26 10 1.19 0.26 0.00 151.4

13:18:36 10 0.49 0.37 0.12 81.5

13:18:46 10 0.35 0.00 0.00 86.2

13:18:56 10 0.89 0.05 0.00 201.3

13:19:06 10 1.24 0.31 0.27 257.1

13:19:16 10 1.61 1.17 1.02 136.6

13:19:26 10 1.60 0.04 0.04 243.8

13:19:36 10 2.06 0.00 0.00 238.4

13:19:46 10 1.44 0.22 0.22 277.4

13:19:56 10 2.19 1.06 1.06 123.1

13:20:06 10 0.86 0.00 0.00 256.3

13:20:16 10 0.50 0.00 0.00 238.1

13:20:26 10 0.91 0.50 0.05 198.7

13:20:36 10 0.96 0.46 0.41 217.8

13:20:46 10 1.10 0.44 0.44 226.6

13:20:56 10 1.43 0.00 0.00 168.1

13:21:06 10 1.03 0.05 0.00 194.2

13:21:16 10 1.14 0.05 0.05 218.8

13:21:26 10 0.62 0.00 0.00 193.6

13:21:36 10 1.70 0.15 0.00 194.3

13:21:46 10 1.63 0.10 0.10 208.5

13:21:56 10 1.98 0.00 0.00 212.3

13:22:06 10 0.89 0.38 0.38 212.7

13:22:16 10 1.25 0.26 0.10 192.0

13:22:26 10 1.13 0.05 0.00 212.3

13:22:36 10 1.35 0.00 0.00 191.9

13:22:46 10 2.85 0.47 0.41 193.0

13:22:56 10 0.99 0.25 0.25 905.1

I have 14 client (GoLang) machine each have 2 different process. One batch read (~6-8 batch size each) and one writes. There are 3 separate machine (GoLang) for UDF.

I am seeing latency for batch read on client machine is high. I am assuming my cluster should easily handle 50-80k/s writes along with 4-5K/s UDF and 1-2K/s batch read. But even on 5-10K writes , latency on client machine for batch read exceeds ~100ms. I am not able to identify reason of slow performance ? I tried increasing batch-threads to 24 but no improvement ![]()

Htop:

I have enabled logging on Go_client as well.

$ cat /var/log/supervisord-avatar.out* | grep '2017/10/13'

2017/10/13 08:08:32 Connection to address `10.124.117.88:3000` failed to establish with error: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 08:08:32 Node BB90857FB902500 10.124.117.88:3000: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 08:08:32 Connection to address `10.124.117.88:3000` failed to establish with error: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 08:08:32 Node BB90857FB902500 10.124.117.88:3000: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 08:08:33 Connection to address `10.124.117.88:3000` failed to establish with error: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 08:08:33 Node BB90857FB902500 10.124.117.88:3000: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 08:08:33 Connection to address `10.124.117.88:3000` failed to establish with error: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 08:08:33 Node BB90857FB902500 10.124.117.88:3000: dial tcp 10.124.117.88:3000: i/o timeout

2017/10/13 07:19:51 Tending took 1.10061868s, while your requested ClientPolicy.TendInterval is 1s. Tends are slower than the interval, and may be falling behind the changes in the cluster.

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:53 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:54 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:54 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:54 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:54 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:19:54 Node BB9A4083A7AC40C 10.57.115.42:3000: EOF

2017/10/13 07:31:42 Tending took 1.257162318s, while your requested ClientPolicy.TendInterval is 1s. Tends are slower than the interval, and may be falling behind the changes in the cluster.

2017/10/13 07:41:52 Tending took 1.282660632s, while your requested ClientPolicy.TendInterval is 1s. Tends are slower than the interval, and may be falling behind the changes in the cluster.

2017/10/13 07:04:41 No connections available; seeding...

2017/10/13 07:04:41 Seeding the cluster. Seeds count: 10

2017/10/13 07:04:41 Node BB97CD1657AC40C partition generation -2 changed to 2462

2017/10/13 07:04:41 Node BB9E0D0657AC40C partition generation -2 changed to 2757

2017/10/13 07:04:41 Tend finished. Live node count changes from 0 to 7

2017/10/13 07:04:41 Node BB9D4D0657AC40C partition generation -2 changed to 1234

2017/10/13 07:04:41 Node BB97858FB902500 partition generation -2 changed to 3747

2017/10/13 07:04:41 Node BB90857FB902500 partition generation -2 changed to 1268

2017/10/13 07:04:41 Node BB930D0657AC40C partition generation -2 changed to 3280

2017/10/13 07:04:41 Node BB940BFFA902500 partition generation -2 changed to 3593

2017/10/13 07:04:41 Tend finished. Live node count changes from 7 to 14

2017/10/13 07:04:41 Node BB9F0D0657AC40C partition generation -2 changed to 2859

2017/10/13 07:04:41 Node BB9E047FB902500 partition generation -2 changed to 3206

2017/10/13 07:04:41 Node BB9A4083A7AC40C partition generation -2 changed to 2856

2017/10/13 07:04:41 Node BB97CC4FA902500 partition generation -2 changed to 2886

2017/10/13 07:04:41 Node BB9E88A637AC40C partition generation -2 changed to 3207

2017/10/13 07:04:41 Node BB95CD5FA902500 partition generation -2 changed to 3755

2017/10/13 07:04:41 Node BB99C083A7AC40C partition generation -2 changed to 1811

2017/10/13 07:04:44 Connection to address `10.57.115.91:3000` failed to establish with error: dial tcp 10.57.115.91:3000: getsockopt: no route to host

2017/10/13 07:04:44 Seed 10.57.115.91:3000 failed: dial tcp 10.57.115.91:3000: getsockopt: no route to host

2017/10/13 07:06:32 No connections available; seeding...

2017/10/13 07:06:32 Seeding the cluster. Seeds count: 10

2017/10/13 07:06:32 Node BB99C083A7AC40C partition generation -2 changed to 1811

2017/10/13 07:06:32 Node BB940BFFA902500 partition generation -2 changed to 3593

2017/10/13 07:06:32 Node BB930D0657AC40C partition generation -2 changed to 3280

2017/10/13 07:06:32 Node BB9D4D0657AC40C partition generation -2 changed to 1234

2017/10/13 07:06:32 Node BB97858FB902500 partition generation -2 changed to 3747

2017/10/13 07:06:32 Node BB90857FB902500 partition generation -2 changed to 1268

2017/10/13 07:06:32 Tend finished. Live node count changes from 0 to 6

2017/10/13 07:06:32 Tend finished. Live node count changes from 6 to 14

2017/10/13 07:06:32 Node BB9A4083A7AC40C partition generation -2 changed to 2856

2017/10/13 07:06:32 Node BB95CD5FA902500 partition generation -2 changed to 3755

2017/10/13 07:06:32 Node BB9F0D0657AC40C partition generation -2 changed to 2859

2017/10/13 07:06:32 Node BB9E88A637AC40C partition generation -2 changed to 3207

2017/10/13 07:06:32 Node BB9E0D0657AC40C partition generation -2 changed to 2757

2017/10/13 07:06:32 Node BB9E047FB902500 partition generation -2 changed to 3206

2017/10/13 07:06:32 Node BB97CD1657AC40C partition generation -2 changed to 2462

2017/10/13 07:06:32 Node BB97CC4FA902500 partition generation -2 changed to 2886

2017/10/13 07:06:35 Connection to address `10.57.115.91:3000` failed to establish with error: dial tcp 10.57.115.91:3000: getsockopt: no route to host

2017/10/13 07:06:35 Seed 10.57.115.91:3000 failed: dial tcp 10.57.115.91:3000: getsockopt: no route to host

2017/10/13 07:12:03 No connections available; seeding...

2017/10/13 07:12:03 Seeding the cluster. Seeds count: 10

2017/10/13 07:12:03 Node BB9E0D0657AC40C partition generation -2 changed to 2757

2017/10/13 07:12:03 Node BB930D0657AC40C partition generation -2 changed to 3280

2017/10/13 07:12:03 Node BB9D4D0657AC40C partition generation -2 changed to 1234

2017/10/13 07:12:03 Node BB940BFFA902500 partition generation -2 changed to 3593

2017/10/13 07:12:03 Node BB97858FB902500 partition generation -2 changed to 3747

2017/10/13 07:12:03 Node BB90857FB902500 partition generation -2 changed to 1268

2017/10/13 07:12:03 Tend finished. Live node count changes from 0 to 6

2017/10/13 07:12:03 Tend finished. Live node count changes from 6 to 14

2017/10/13 07:12:03 Node BB9A4083A7AC40C partition generation -2 changed to 2856

2017/10/13 07:12:03 Node BB97CD1657AC40C partition generation -2 changed to 2462

2017/10/13 07:12:03 Node BB9E88A637AC40C partition generation -2 changed to 3207

2017/10/13 07:12:03 Node BB95CD5FA902500 partition generation -2 changed to 3755

2017/10/13 07:12:03 Node BB9E047FB902500 partition generation -2 changed to 3206

2017/10/13 07:12:03 Node BB97CC4FA902500 partition generation -2 changed to 2886

2017/10/13 07:12:03 Node BB9F0D0657AC40C partition generation -2 changed to 2859

2017/10/13 07:12:03 Node BB99C083A7AC40C partition generation -2 changed to 1811

2017/10/13 07:12:06 Connection to address `10.57.115.91:3000` failed to establish with error: dial tcp 10.57.115.91:3000: getsockopt: no route to host

2017/10/13 07:12:06 Seed 10.57.115.91:3000 failed: dial tcp 10.57.115.91:3000: getsockopt: no route to host

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

2017/10/13 09:02:32 Node BB90857FB902500 10.124.117.88:3000: No available connections to the node. Connection Pool was empty, and limited to certain number of connections.

How can i debug it? Or my expectations are too high ?